Information theory today is still based on the work of Shannon, who studied the effect of noise on the transmission of messages. In Shannon's time noise was a major problem, since message transmission was analog. Today, with digital transmission and error correction, noise is no longer the central problem of message transmission.

Shannon explicitly excluded the meaning of messages from his considerations. As a result, computers still do not “understand” what they are seeing or what they are supposed to do, and concepts such as “understanding”, “meaning” and “algorithm” are not even defined in Shannon's theory.

Nor does information theory contain any physical variable. Contrary to what is often said, Shannon's “information” is not identical to thermodynamic entropy: the information contained in your emails does not depend on the temperature of your office.
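To make the distinction concrete, the sketch below computes the Shannon entropy H = Σ p_i log2(1/p_i) of a message from its symbol frequencies. It is a minimal illustration, assuming that the relative symbol frequencies within a single message stand in for the source probabilities p_i; the function name `shannon_entropy` is hypothetical. Only symbol statistics enter the calculation; no physical quantity such as temperature appears among its inputs.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Empirical Shannon entropy of a message, in bits per symbol.

    Assumption (for illustration): the relative frequency of each symbol
    in this one message is used as its probability p_i.
    """
    if not message:
        return 0.0
    counts = Counter(message)
    n = len(message)
    # H = sum over symbols of p_i * log2(1 / p_i); written this way so a
    # single-symbol message yields 0.0 rather than -0.0
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(shannon_entropy("aaaa"))  # 0.0  (fully predictable)
print(shannon_entropy("abab"))  # 1.0  (two equally likely symbols)
print(shannon_entropy("abcd"))  # 2.0  (four equally likely symbols)
```

The result depends only on how often each symbol occurs, which is exactly why it says nothing about what the message means.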

As in every other field of physics, a physics of information must start from a mathematical structure and connect objects of the physical world to that structure. For some problems, the physics of information can be based on vector algebra; for others, on Boolean algebra. A general mathematical framework for treating all instances of information processing in a unified way is given by category theory.

The result is a relativistic theory: the relevant properties of messages are defined only with respect to the corresponding message transformations. For instance, two messages are identical in meaning if they are connected through an injective transformation.
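As a minimal illustration of what “connected through an injective transformation” means, the sketch below assumes that messages form a finite set and that a transformation is given as a lookup table. A transformation is injective when no two input messages map to the same output, so every output can be traced back to exactly one original. The names `is_injective`, `invert`, `encode` and `collapse` are hypothetical and not part of the framework described here.

```python
from collections.abc import Hashable
from typing import Dict


def is_injective(transform: Dict[Hashable, Hashable]) -> bool:
    """True if no two input messages are mapped to the same output message."""
    outputs = list(transform.values())
    return len(outputs) == len(set(outputs))


def invert(transform: Dict[Hashable, Hashable]) -> Dict[Hashable, Hashable]:
    """Invert an injective transformation on its image; refuse otherwise."""
    if not is_injective(transform):
        raise ValueError("transformation is not injective")
    return {out: inp for inp, out in transform.items()}


# Hypothetical example: three messages under two different transformations
encode = {"yes": "1", "no": "0", "maybe": "?"}              # one-to-one
collapse = {"yes": "ok", "no": "not ok", "maybe": "not ok"}  # merges two messages

print(is_injective(encode))    # True
print(is_injective(collapse))  # False
print(invert(encode)["1"])     # yes
```

Under `encode`, every output still determines its input, so nothing distinguishing the messages is lost; under `collapse`, “no” and “maybe” can no longer be told apart.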

As a consequence, even the seemingly complicated problem of image recognition becomes algebraic: the meaning of an image can be calculated in the same way as the value of a numerical function.

The seemingly separate fields of AI and neuroscience are also united into one: mirror neurons as well as “Halle Berry neurons” are obvious and necessary in a physics framework, whereas they cannot be explained in terms of Shannon's theory. Robotic brains, too, are based on mirror neurons.

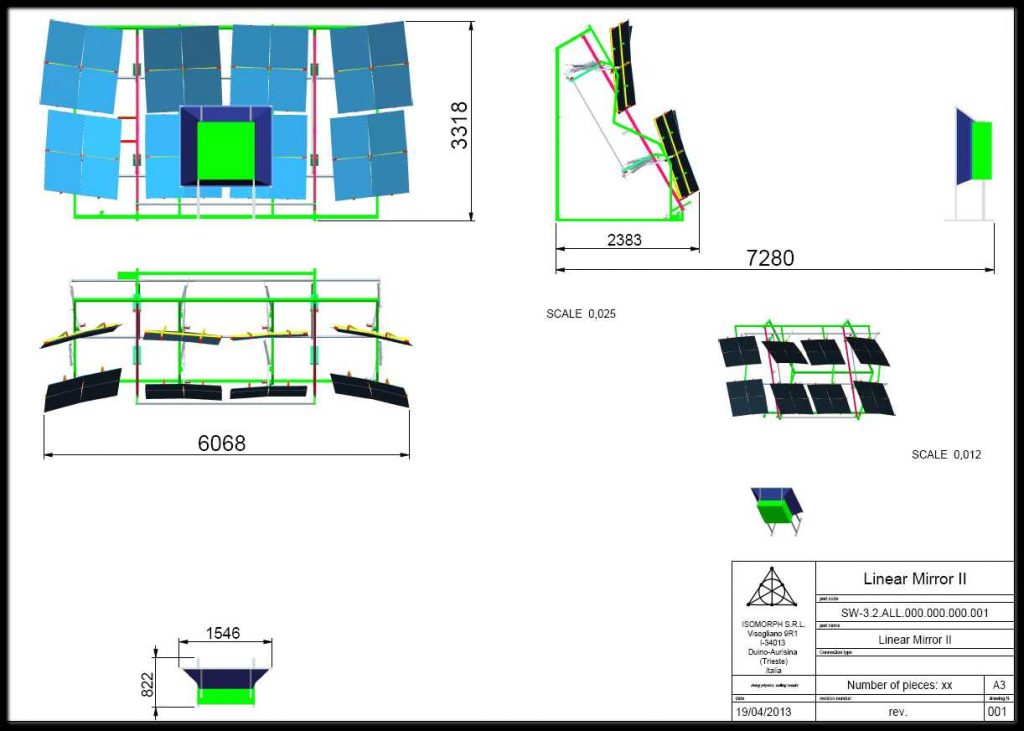

The Linear Mirror is an application of the physics of information. It is based on a generalization of Schönfinkel's finding that there is no fundamental difference between a function of one variable and a function of several variables (Einstein appreciated this). The Linear Mirror shows that there is likewise no difference between a function with one output variable and a function with several: it is just a question of representation. In other words, the Linear Mirror performs parallel computing beyond quantum computing.
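Schönfinkel's observation is what programmers today call currying: a function of two variables can be rewritten as a function of one variable that returns another one-variable function. The sketch below shows this, together with an analogous change of representation on the output side, where a function returning a pair is rewritten as a pair of single-output functions and back. It illustrates only the general idea of “a question of representation”, assuming plain Python functions stand in for the mathematical ones; it is not an implementation of the Linear Mirror, and all names (`curry`, `uncurry`, `split_outputs`, `join_outputs`, `divmod_pair`) are hypothetical.

```python
from typing import Callable, Tuple


# A function of two input variables ...
def add(x: int, y: int) -> int:
    return x + y


# ... and its curried form, taking one variable at a time
def curry(f: Callable[[int, int], int]) -> Callable[[int], Callable[[int], int]]:
    return lambda x: lambda y: f(x, y)


def uncurry(g: Callable[[int], Callable[[int], int]]) -> Callable[[int, int], int]:
    return lambda x, y: g(x)(y)


# A function with two output variables ...
def divmod_pair(a: int, b: int) -> Tuple[int, int]:
    return a // b, a % b


# ... represented instead as a pair of single-output functions
def split_outputs(f: Callable[..., Tuple[int, int]]):
    return (lambda *args: f(*args)[0], lambda *args: f(*args)[1])


# and joined back into one function with two outputs
def join_outputs(f0: Callable[..., int], f1: Callable[..., int]):
    return lambda *args: (f0(*args), f1(*args))


add_c = curry(add)
print(add(2, 3), add_c(2)(3), uncurry(add_c)(2, 3))  # 5 5 5

q, r = split_outputs(divmod_pair)
print(divmod_pair(17, 5), q(17, 5), r(17, 5))  # (3, 2) 3 2
print(join_outputs(q, r)(17, 5))  # (3, 2)
```

In both directions the conversion loses nothing: each representation can be turned back into the other, which is the sense in which the number of inputs or outputs is a matter of representation.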

Several prototype robotic brains based on the physics of information were built 15 years ago, and they worked very well. But experts in traditional IT did not want to hear about physics, hoping to make progress without it.

The failure of the AI departments of leading big companies over the last decade (with billions of dollars lost), as well as the war in Ukraine, where human beings still have to sacrifice their lives (instead of sending robots) in order to fend off the ignorant, suggest that the physics of information should be taken up again.

We are looking for investors or funding.